Brain dump on the diversity of AI risk

AI has the power to change the world in both wonderful and terrible ways. We should try to make the wonderful outcomes more likely than the terrible ones. Towards that end, here is a brain dump of my thoughts about how AI might go wrong, in rough outline form. I am not the first person to have any of these thoughts, but collecting and structuring these risks was useful for me. Hopefully reading them will be useful for you.

My top fears include targeted manipulation of humans, autonomous weapons, massive job loss, AI-enabled surveillance and subjugation, widespread failure of societal mechanisms, extreme concentration of power, and loss of human control.

I want to emphasize -- I expect AI to lead to far more good than harm, but part of achieving that is thinking carefully about risk.

# Warmup: Future AI capabilities and evaluating risk

1. Over the last several years, AI has developed remarkable new capabilities. These include [writing software](https://github.com/features/copilot), [writing essays](https://www.nytimes.com/2023/08/24/technology/how-schools-can-survive-and-maybe-even-thrive-with-ai-this-fall.html), [passing the bar exam](https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4389233), [generating realistic images](https://imagen.research.google/), [predicting how proteins will fold](https://www.deepmind.com/research/highlighted-research/alphafold), and [drawing unicorns in TikZ](https://arxiv.org/abs/2303.12712). (The last one is only slightly tongue in cheek. Controlling 2d images after being trained only on text is impressive.)

1. AI will continue to develop remarkable new capabilities.

* Humans aren't irreplicable. There is no fundamental barrier to creating machines that can accomplish anything a group of humans can accomplish (excluding tasks that rely in their definition on being performed by a human).

* For intellectual work, AI will become cheaper and faster than humans.

* For physical work, we are likely to see a sudden transition, from expensive robots that do narrow things in very specific situations, to cheap robots that can be repurposed to do many things.

* The more capable and adaptable the software controlling a robot is, the cheaper, less reliable, and less well calibrated the sensors, actuators, and body need to be.

* Scaling laws teach us that AI models can be improved by scaling up training data. I expect a virtuous cycle where somewhat general robots become capable enough to be widely deployed, enabling collection of much larger-scale diverse robotics data, leading to more capable robots.

* The timeline for broadly human-level capabilities is hard to [predict](https://bounded-regret.ghost.io/scoring-ml-forecasts-for-2023/). My guess is more than 4 years and less than 40.

* AI will do things that no human can do.

* Operate faster than humans.

* Repeat the same complex operation many times in a consistent and reliable way.

* Tap into broader capabilities than any single human can tap into. e.g. the same model can [pass a medical exam](https://arxiv.org/abs/2303.13375), answer questions about [physics](https://benathi.github.io/blogs/2023-03/gpt4-physics-olympiad/) and [cosmology](https://www.linkedin.com/pulse/asking-gpt-4-cosmology-gabriel-altay/), [perform mathematical reasoning](https://blog.research.google/2022/06/minerva-solving-quantitative-reasoning.html?m=1), read [every human language](https://www.reddit.com/r/OpenAI/comments/13hvqfr/native_bilinguals_is_gpt4_equally_as_impressive/) ... and make unexpected connections between these fields.

* Go deeper in a narrow area of expertise than a human could. e.g. an AI can read every email and calendar event you've ever received, web page you've looked at, and book you've read, and remind you of past context whenever anything -- person, topic, place -- comes up that's related to your past experience. Even the most dedicated personal human assistant would be unable to achieve the same degree of familiarity.

* Share knowledge or capabilities directly, without going through a slow and costly teaching process. If an AI model gains a skill, that skill can be shared by copying the model's parameters. Humans are unable to gain new skills by copying patterns of neural connectivity from each other.

1. AI capabilities will have profound effects on the world.

* Those effects have the possibility of being wonderful, terrible, or (most likely) some complicated mixture of the two.

* There is not going to be just one consequence from advanced AI. AI will produce lots of different profound side effects, **all at once**. The fears below should not be considered as competing scenarios. You should rather imagine the chaos that will occur when variants of many of the below fears materialize simultaneously. (see the concept of [polycrisis](https://www.weforum.org/agenda/2023/03/polycrisis-adam-tooze-historian-explains/))

1. When deciding what AI risks to focus on, we should evaluate:

* **probability:** How likely are the events that lead to this risk?

* **severity:** If this risk occurs, how large is the resulting harm? (Different people will assign different severities based on different value systems. This is OK. I expect better outcomes if different groups focus on different types of risk.)

* **cascading consequences:** Near-future AI risks could lead to the disruption of the social and institutional structures that enable us to take concerted rational action. If this risk occurs, how will it impact our ability to handle later AI risks?

* **comparative advantage:** What skills or resources do I have that give me unusual leverage to understand or mitigate this particular risk?

1. We should take *social disruption* seriously as a negative outcome. This can be far worse than partisans having unhinged arguments in the media. If the mechanisms of society are truly disrupted, we should expect outcomes like violent crime, kidnapping, fascism, war, rampant addiction, and unreliable access to essentials like food, electricity, communication, and firefighters.

1. Mitigating most AI-related risks involves tackling a complex mess of overlapping social, commercial, economic, religious, political, geopolitical, and technical challenges. I come from an ML science + engineering background, and I am going to focus on suggesting mitigations in the areas where I have expertise. *We desperately need people with diverse interdisciplinary backgrounds working on non-technical mitigations for AI risk.*

# Specific risks and harms stemming from AI

1. The capabilities and limitations of present day AI are already causing or exacerbating harms.

* Harms include: generating socially biased results; generating (or failing to recognize) toxic content; generating bullshit and lies (current large language models are poorly grounded in the truth even when used and created with the best intents); causing addiction and radicalization (through gamification and addictive recommender systems).

* These AI behaviors are already damaging lives. For example, see the use of racially biased ML to [recommend criminal sentencing](https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing).

* I am not going to focus on this class of risk, despite its importance. These risks are already a topic of research and concern, though more resources are needed. I am going to focus on future risks, where less work is (mostly) being done towards mitigations.

1. AI will do most jobs that are currently done by humans.

* This is likely to lead to massive unemployment.

* This is likely to lead to massive social disruption.

* I'm unsure in what order jobs will be supplanted. The tasks that are hard or easy for an AI are different from the tasks that are hard or easy for a person. We have terrible intuition for this difference.

* Five years ago I would have guessed that generating commissioned art from a description would be one of the last, rather than one of the first, human tasks to be automated.

* Most human jobs involve a diversity of skills. We should expect many jobs to [transform as parts of them are automated, before they disappear](https://www.journals.uchicago.edu/doi/full/10.1086/718327).

* Most of the mitigations for job loss are social and political.

* [Universal basic income](https://en.wikipedia.org/wiki/Universal_basic_income).

* Technical mitigations:

* Favor research and product directions that seem likely to be more complementary and enabling, and less competitive, with human job roles. Almost everything will have a little of both characters ... but the balance between enabling vs. competing with humans is a question we should be explicitly thinking about when we choose projects.

1. AI will enable extremely effective targeted manipulation of humans.

* Twitter/X currently uses *primitive* machine learning models, and chooses a sequence of *pre-existing* posts to show me. This is enough to make me spend hours slowly scrolling a screen with my finger, receiving little value in return.

* Future AI will be able to dynamically generate the text, audio, and video stimuli that are predicted to be most compelling to me personally, based upon the record of my past online interactions.

* Stimuli may be designed to:

* cause addictive behavior, such as compulsive app use

* promote a political agenda

* promote a religious agenda

* promote a commercial agenda -- advertising superstimuli

* Thought experiments

* Have you ever met someone, and had an instant butterfly-in-the-stomach can't-quite-breathe feeling of attraction? Imagine if every time you load a website, there is someone who makes you, specifically, feel that way, telling you to drink Coca-Cola.

* Have you ever found yourself obsessively playing an online game, or obsessively scrolling a social network or news source? Imagine if the intermittent rewards were generated based upon a model of your mental state, to be as addictive as possible to your specific brain at that specific moment in time.

* Have you ever crafted an opinion to try to please your peers? Imagine that same dynamic, but where the peer feedback is artificial and chosen by an advertiser.

* Have you ever listened to music, or looked at art, or read a passage of text, and felt like it was created just for you, and touched something deep in your identity? Imagine if every political ad made you feel that way.

* I believe the social effects of this will be much, much more powerful and qualitatively different than current online manipulation. (*"[More is different](https://www.jstor.org/stable/pdf/1734697.pdf?casa_token=GDThS0md5IsAAAAA:cnx_fNDcb477G6-zU5qu0qC1tbKmgAhnIj_QecjFNwwYi3pge7vEWiaxIm4mAJqsatKbKnyMu-6ettZAtUDxysDPeFzAM736jpKJq-alTnjB4kCBAFrX3g)"*, or *"quantity has a quality all its own"*, depending on whether you prefer to quote P.W. Anderson or Stalin)

* If our opinions and behavior are controlled by whomever pipes stimuli to us, then it breaks many of the basic mechanisms of democracy. Objective truth and grounding in reality will be increasingly irrelevant to societal decisions.

* If the addictive potential of generated media is similar to or greater than that of hard drugs ... there are going to be a lot of addicts.

* Class divides will grow worse, between people who are privileged enough to protect themselves from manipulative content, and those who are not.

* Feelings of emotional connection or beauty may become vacuous, as they are mass produced. (see [parasocial relationships](https://en.wikipedia.org/wiki/Parasocial_interaction) for a less targeted present day example)

* Non-technical mitigations:

* Advocate for laws that restrict stimuli and interaction dynamics which produce anomalous effects on human behavior.

* Forbid apps on the Google or Apple storefront that produce anomalous effects on human behavior. (this will include forbidding extremely addictive apps -- so may be difficult to achieve given incentives)

* Technical mitigations:

* Develop tools to identify stimuli which will produce anomalous effects on human behavior, or anomalous affective response.

* Protective filter: Develop models that rewrite stimuli (text or images or other modalities) to contain the same denoted information, but without the associated manipulative subtext. That is, rewrite stimuli to contain the parts you want to experience, but remove aspects which would make you behave in a strange way.

* Study the ways in which human behavior and/or perception can be manipulated by optimizing stimuli, to better understand the problem.

* I have done some work -- in a collaboration led by Gamaleldin Elsayed -- where we showed that adversarial attacks which cause image models to make incorrect predictions also bias the perception of human beings, even when the attacks are nearly imperceptible. See the Nature Communications paper [*Subtle adversarial image manipulations influence both human and machine perception*](https://www.nature.com/articles/s41467-023-40499-0).

* Research scaling laws between model size, training compute, training data from an individual and from a population, and ability to influence a human.

1. AI will enable new weapons and new types of violence.

* Autonomous weapons, i.e. weapons that can fight on their own, without requiring human controllers on the battlefield.

* Autonomous weapons are difficult to attribute to a responsible group. No one can prove whose drones committed an assassination or an invasion. We should expect increases in deniable anonymous violence.

* Removal of social cost of war -- if you invade a country with robots, none of your citizens die, and none of them see atrocities. Domestic politics may become more accepting of war.

* Development of new weapons

* e.g. new biological, chemical, cyber, or robotic weapons

* AI will enable these weapons to be made more capable + deadly than if they were created solely by humans.

* AI may lower the barriers to access, so smaller + less resourced groups can make them.

* Technical mitigations:

* Be extremely cautious of doing research which is dual use. Think carefully about potential violent or harmful applications of a capability, during the research process.

* When training and releasing models, include safeguards to prevent them being used for violent purposes. e.g. large language models should refuse to provide instructions for building weapons. Protein/DNA/chemical design models should refuse to design molecules which match characteristics of bio-weapons. This should be integrated as much as possible into the entire training process, rather than tacked on via fine-tuning.

1. AI will enable qualitatively new kinds of surveillance and social control.

* AI will have the ability to simultaneously monitor all electronic communications (email, chat, web browsing, ...), cameras, and microphones in a society. It will be able to use that data to build a personalized model of the likely motivations, beliefs, and actions of every single person. Actionable intelligence on this scale, and with this degree of personalization, is different from anything previously possible.

* This domestic surveillance data will be useful and extremely tempting even in societies which aren't currently authoritarian. e.g. detailed surveillance data could be used to prevent crime, stop domestic abuse, watch for the sale of illegal drugs, or track health crises.

* Once a society starts using this class of technology, it will be difficult to seek political change. Organized movements will be transparent to whoever controls the surveillance technology. Behavior that is considered undesirable will be easily policed.

* This class of data can be used for commercial as well as political ends. The products that are offered to you may become hyper-specialized. The jobs that are offered to you may become hyper-specific and narrowly scoped. This may have negative effects on social mobility, and on personal growth and exploration.

* Political mitigations:

* Offer jobs in the US to all the AI researchers in oppressive regimes!! We currently make it hard for world class talent from countries with which we have a bad relationship to immigrate. We should instead be making it easy for the talent to defect.

* Technical mitigations:

* Don't design the technologies that are obviously best suited for a panopticon.

* Can we design behavioral patterns that are adversarial examples, and will mislead surveillance technology?

* Can we use techniques e.g. from differential privacy to technically limit the types of information available in aggregated surveillance data?
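As one concrete illustration of the differential privacy idea above, here is a minimal sketch of the Laplace mechanism applied to a single aggregate count. It is not a proposal for how a real system should be built. The function names, the record format, and the `flagged` field are all hypothetical, and it assumes each person contributes at most one record, so the count has sensitivity 1.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with mean
    `scale` is Laplace-distributed with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a noisy count of records matching `predicate`.

    Assumption: adding or removing one person changes the count by at
    most 1 (sensitivity 1), so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical aggregated data: one record per person.
    records = [{"flagged": i % 4 == 0} for i in range(1000)]
    noisy = private_count(records, lambda r: r["flagged"], epsilon=0.5, rng=rng)
    print(f"noisy count: {noisy:.1f}")
```

A smaller `epsilon` adds more noise, which makes it harder to infer anything about one individual from the released number. The guarantee covers only queries that go through the mechanism, and the privacy loss accumulates across repeated queries, so this limits what an aggregate reveals rather than preventing collection of the underlying data.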

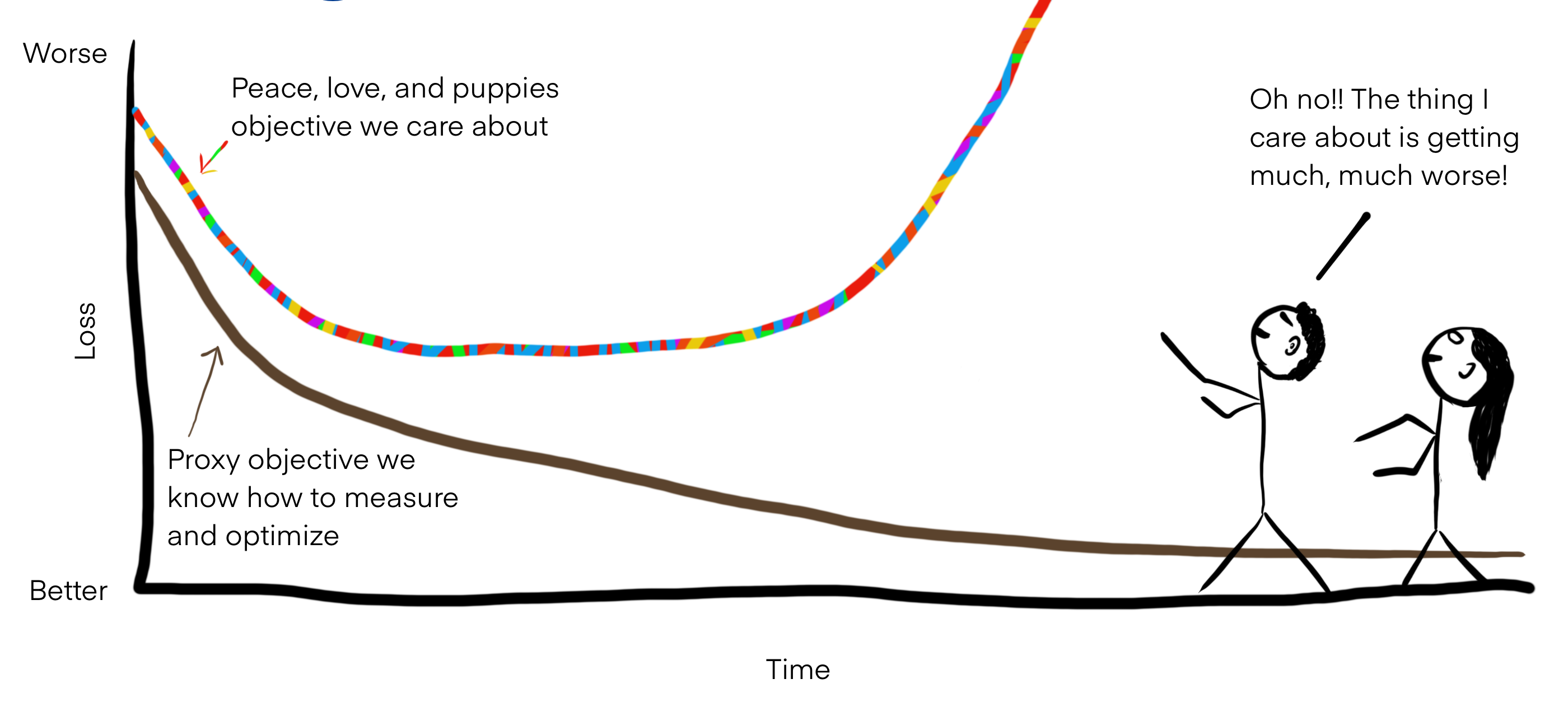

1. AI will catalyze failure of societal mechanisms through increased efficiency. I wrote a [blog post on this class of risk](https://sohl-dickstein.github.io/2022/11/06/strong-Goodhart.html).

BibTeX entry for post:

@misc{sohldickstein20230910,

author = {Sohl-Dickstein, Jascha},

title = {{ Brain dump on the diversity of AI risk }},

howpublished = "\url{https://sohl-dickstein.github.io/2023/09/10/diversity-ai-risk.html}",

date = {2023-09-10}

}
